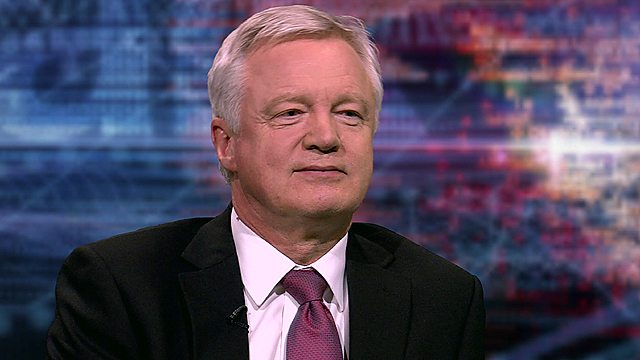

David Davis - Conservative Member of Parliament, UK HARDtalk

Transcript

| Line | From | To | |

|---|---|---|---|

reviewed. -- that three convict did. Those are the headlines. Now it is | :00:06. | :00:16. | |

| :00:16. | :00:20. | ||

time for HARDtalk. My guest today has been a candidate for the leader | :00:20. | :00:24. | |

of the British Conservative party. He has also made a name for himself | :00:24. | :00:28. | |

as a civil liberties campaigner, arguing against what is sometimes | :00:28. | :00:31. | |

called the surveillance state. What does he make of the massive | :00:31. | :00:37. | |

collection of data by the NSA and also GCHQ, revealed by the American | :00:37. | :00:44. | |

whistleblower, Edward Snowden? In the years since the 9/11 attacks, | :00:44. | :00:54. | |

| :00:54. | :01:11. | ||

have we got the balance wrong between liberty and security? | :01:11. | :01:17. | |

David Davis, welcome to HARDtalk. Were you surprised by the scale of | :01:17. | :01:21. | |

the revelations by Edward Snowden, the piles and piles of stuff that | :01:21. | :01:28. | |

was collected by the Americans and the British? Not really. I am | :01:28. | :01:34. | |

surprised at the compliance of Google and Yahoo! . But I was not | :01:34. | :01:40. | |

surprised by the fact that it goes on. When you recruit spooks, you | :01:40. | :01:47. | |

recruit people who go to the edge in what they see as the national interest. | :01:47. | :01:50. | |

Let's begin where you begin on this. There is nothing wrong with | :01:50. | :01:57. | |

spying. Nothing wrong, as long as it is properly targeted. And there is | :01:57. | :02:01. | |

nothing wrong with using the best technology, and if people are | :02:01. | :02:04. | |

communicating by email and other means, that has to be looked at. | :02:04. | :02:12. | |

Absolutely. The problem is in the law. What was done, it looks as | :02:12. | :02:20. | |

though it was legal. Certainly under American law. Because they treat | :02:20. | :02:26. | |

American citizens very differently to everybody else. You and I might as | :02:26. | :02:30. | |

well be in North Korea as far as American law is concerned. That is | :02:30. | :02:36. | |

not surprising in that respect. The other aspect is British law. GCHQ, | :02:36. | :02:42. | |

appears to have said, to the Americans, the opposite of what they | :02:42. | :02:47. | |

have been saying to parliamentary committees. They say that British | :02:47. | :02:52. | |

surveillance is very light touch. It is very un- risk of deep will stop | :02:52. | :02:56. | |

that is very important. They had exploited the law to its limits, | :02:56. | :03:03. | |

what we are now discovering is what it means for ordinary citizens. | :03:03. | :03:11. | |

is the Government Communications Headquarters. But PRISM and | :03:11. | :03:16. | |

Tempora, the codenames for these operations, helped prevent more than | :03:16. | :03:21. | |

50 potential terrorist attacks. It is a narrow system directed at us | :03:21. | :03:26. | |

being able to protect our people, all of it is done under the | :03:26. | :03:30. | |

oversight of the courts. He says it is within the law and we have | :03:30. | :03:36. | |

safeguards. They are talking about American citizens, not you and | :03:36. | :03:46. | |

| :03:46. | :03:48. | ||

me. But there is also the standard deception. That this gathering, | :03:48. | :03:53. | |

let's say, of 300 million pieces of information, has helped to stop | :03:53. | :04:03. | |

these five, ten, 50 crimes. But you can stop those 50 crimes by a much | :04:03. | :04:10. | |

more narrow investigation. For example, not impinging on our | :04:10. | :04:15. | |

privacy. They have gone in this really crude way of sweeping | :04:15. | :04:22. | |

everything up and it does not deliver the best security. Coming on | :04:22. | :04:27. | |

to Britain, the Foreign Secretary, he has also been very clear. He says | :04:27. | :04:31. | |

that if you are a law-abiding citizen you have nothing to fear | :04:32. | :04:34. | |

about intelligence agencies listening to the contents of your phone | :04:34. | :04:41. | |

calls. Tell that to Doreen Lawrence. The mother of Stephen Lawrence, the | :04:41. | :04:46. | |

young man who was murdered, there was a very aborted police enquiry, | :04:46. | :04:50. | |

at one stage they started to look on whether they could get dirt on her. | :04:50. | :05:00. | |

It is not the only example. Americans, you had Clinton holding | :05:00. | :05:05. | |

FBI files. Governments, even democratic governments, or their | :05:05. | :05:08. | |

bureaucracies, they misuse powers if they are allowed to. | :05:08. | :05:14. | |

You may be out of touch on what British citizens feel about it. If | :05:14. | :05:22. | |

you are asked to balance security and privacy, 42% put | :05:22. | :05:29. | |

security ahead of privacy. Forgive me, it is a dumb question. We prefer | :05:29. | :05:35. | |

it to be secure. They do if it thinks it applies to them. Most | :05:35. | :05:39. | |

people think they do not apply to me, my emails are not being looked | :05:39. | :05:46. | |

at or whatever. There are two deceptions in the question. | :05:46. | :05:51. | |

Number one is that there is a trade-off. Remember what happened on | :05:51. | :05:56. | |

the 7th of July, with the attack and 50 people being killed. Horrible | :05:56. | :06:03. | |

crime. Those criminals, terrorists if you want to | :06:03. | :06:06. | |

call them that, they were known to MI5 and the police, they were | :06:06. | :06:12. | |

recorded plotting actions against the state, they had their addresses | :06:12. | :06:17. | |

but they did nothing about it. Why, because they had so much information | :06:17. | :06:22. | |

they could not deal with it. What we are doing is creating vast | :06:22. | :06:26. | |

quantities of information, most of which is incredibly useless, but puts | :06:26. | :06:33. | |

our privacy at risk. But you do accept what President Obama is saying, | :06:33. | :06:38. | |

that this does save lives. It could be done more | :06:38. | :06:43. | |

effectively. It could be done better with less. This is using a shotgun | :06:43. | :06:49. | |

when a rifle could do. This is not focusing sufficiently on the people | :06:49. | :06:53. | |

who should be targeted very closely, and using a great Hoover to pull up | :06:53. | :06:59. | |

everything. You have said since 9/11 and 7/7, that we have lost a | :06:59. | :07:06. | |

sense of balance. Presumably before we did it with different technology. | :07:06. | :07:11. | |

Even 9/11, most of us have not read the commission report, which led to | :07:11. | :07:16. | |

the departure of the head of the CIA. One, because they were not | :07:16. | :07:23. | |

using the information at hand. The people involved, some of them | :07:23. | :07:27. | |

involved in the 9/11 planning, they were in the United States and known | :07:27. | :07:32. | |

to the CIA and nothing was done to it. Warnings were ignored. The issue | :07:32. | :07:35. | |

is focusing and using the information that you have got, not | :07:35. | :07:43. | |

going out to harvest everyone. I have no objection, if you are a | :07:43. | :07:47. | |

spook, and you think there is a real cause for investigating somebody, | :07:48. | :07:54. | |

getting a judge, or a magistrate, to say, yes, you can do that, go and do | :07:54. | :07:58. | |

it. I have an objection of being able to do it willy-nilly with any | :07:58. | :08:06. | |

citizen. But you do not look for a needle in the haystack by making a | :08:06. | :08:11. | |

bigger haystack. That is your argument. But to focus on the | :08:11. | :08:14. | |

legality of it, you are very clear that we are foreigners under | :08:14. | :08:18. | |

US law, but William Hague says that intelligence | :08:18. | :08:22. | |

gathering here is governed by a strong legal framework. You accept | :08:22. | :08:32. | |

that? I do not. One element that we know about, that we were told about, | :08:32. | :08:39. | |

is that whole blocks of information, from British residents, | :08:39. | :08:45. | |

and citizens when they go abroad, that would include me if I email you | :08:45. | :08:48. | |

via Google. Whole blocks of that data are made available to the | :08:48. | :08:54. | |

Americans on an unrestricted basis. They do not check to see if we are | :08:54. | :09:00. | |

terrorists, they just take everything. That can be done legally | :09:00. | :09:04. | |

under an authorisation from the Secretary of State. But I | :09:04. | :09:08. | |

was involved in writing those laws, I was a minister that took the bill | :09:08. | :09:15. | |

through the House of Commons. The MI6 bill. We did not countenance | :09:15. | :09:25. | |

this when we created these options. We were talking about | :09:25. | :09:30. | |

bugging, not the skewed sweeps of data. It is not a loophole, it is | :09:30. | :09:40. | |

| :09:40. | :09:41. | ||

a tunnel right through the law. One controversial example. I as a member | :09:41. | :09:45. | |

of Parliament cannot have my communications intercepted, phone or | :09:45. | :09:48. | |

anything else, without the explicit approval of the Prime Minister. But | :09:48. | :09:55. | |

that goes right around it. You say it is a tunnel that goes through the | :09:55. | :10:01. | |

law. The Foreign Secretary disagrees with you. He says it is authorised, | :10:01. | :10:07. | |

necessary, proportionate and targeted. It is not just him. The | :10:07. | :10:10. | |

Minister under the Labour government says it is fanciful to think that | :10:10. | :10:20. | |

GCHQ are working at ways of circumventing our laws. They do not | :10:20. | :10:23. | |

need to circumvent our laws. The problem is that the laws are too | :10:23. | :10:28. | |

weak. William Hague was right about one thing, it is legal. | :10:28. | :10:32. | |

Proportionate, I do not think so. Not the entire Internet traffic | :10:32. | :10:40. | |

going through this country. What about necessary? Not necessary, | :10:40. | :10:44. | |

because there are better ways of doing it. We come back to focusing | :10:44. | :10:51. | |

on the actual targets. Most of what they do, what is done by the NSA, | :10:51. | :10:59. | |

they are finding communications to a known terrorist in Iraq, and in a | :10:59. | :11:04. | |

western country, and tracking from that radiating network. That sort of | :11:04. | :11:11. | |

thing is very efficient. I do not mind if they have access to the | :11:11. | :11:15. | |

entire traffic if they are carrying that out, if it is a properly | :11:15. | :11:21. | |

controlled exercise. It is far more manpower effective and it works | :11:21. | :11:30. | |

nearly all the time. What we did in Iraq to eradicate Al-Qaeda. What | :11:30. | :11:35. | |

would you do? Would you redraw the law to make it sharper, you are also | :11:35. | :11:40. | |

telling people to look at smaller haystacks. The French, they do | :11:40. | :11:47. | |

60,000 intercepts per year of the sort that we are talking about. They | :11:47. | :11:51. | |

are targeted on a whole series of people that they think are high | :11:51. | :11:56. | |

risk. Not terrorists, but high risk people. That is under a | :11:57. | :12:00. | |

magistrate's view. The Germans, they have a system which is incredibly | :12:00. | :12:06. | |

fierce in terms of risk in people. But I do not notice any of those | :12:06. | :12:12. | |

having a worse terrorist problem than we do. You are also known as | :12:12. | :12:17. | |

not the greatest person in favour of the European | :12:17. | :12:23. | |

Union so I am smiling at this. That in terms of the French and Germans | :12:23. | :12:31. | |

doing their snooping, do they do it better than us? I think they do. | :12:31. | :12:35. | |

Broadly speaking, in terms of domestic, control of domestic | :12:35. | :12:39. | |

surveillance, I think both of them do that better than us. They are | :12:39. | :12:44. | |

both major countries. These are not hillbillies, we are talking about | :12:45. | :12:50. | |

real major countries. Can I make one point which is different between us | :12:50. | :12:55. | |

and Germany and France, and may explain part of the difference. When | :12:55. | :12:59. | |

we were at the end of empire, post-1945, allies with the | :12:59. | :13:07. | |

Americans, we had Bletchley, which was world-famous. The predecessor of | :13:07. | :13:16. | |

GCHQ. It was a more salient player than GCHQ. When we went on from | :13:16. | :13:20. | |

there, we had a number chip in the game when dealing with the American | :13:20. | :13:28. | |

NSA. We had big listening posts in Hong Kong and Cyprus, which gave us | :13:28. | :13:34. | |

a lot of traffic that the Americans could not get at. That was very | :13:34. | :13:38. | |

important to us in maintaining the intelligence relationship. | :13:38. | :13:46. | |

It is the closest in the world between Britain and America. Now, | :13:46. | :13:50. | |

what this may be, it is like a similar sort of chip in the game, | :13:50. | :13:54. | |

allowing the Americans to Hoover through what is this huge amount of | :13:54. | :13:58. | |

Internet traffic, maybe our chip. The replacement for Hong Kong and | :13:58. | :14:05. | |

Cyprus. In terms of Edward Snowden himself, do you see him as a | :14:05. | :14:10. | |

traitor, which many people in the US appear to do, or do you see him as | :14:10. | :14:13. | |

somebody who has alerted us to the great danger to our civil liberties | :14:13. | :14:19. | |

and should he be offered political asylum? I do not see him as a | :14:19. | :14:23. | |

traitor. The Americans get very heavy-handed about these things. I | :14:23. | :14:27. | |

do see him as a whistleblower but there is no doubt that he has broken | :14:27. | :14:31. | |

the law and the contracts that he has taken when he went to work with | :14:31. | :14:36. | |

those agencies. If he was a British citizen, I think he should face some | :14:36. | :14:40. | |

kind of recourse, but it should be offset by the public interest. | :14:40. | :14:48. | |

the big pit, there is no such thing as privacy? It is not the states, it | :14:48. | :14:53. | |

is the supermarkets. They can find out what you are doing. CCTV. | :14:53. | :14:58. | |

Journalists who can bug your phone. But there has been a scandal about | :14:58. | :15:08. | |

| :15:08. | :15:18. | ||

we find out about this. Somewhat has been in the news recently. A member | :15:18. | :15:28. | |

| :15:28. | :15:29. | ||

been in the news to irregularity. Why did he go into such a big fight | :15:29. | :15:32. | |

with the press? Because he believed that they intrude on his privacy, | :15:32. | :15:37. | |

and that broke his family. That is just one example. I could give you | :15:37. | :15:45. | |

others. Examples of the misuse, and this is not just the state. Big | :15:45. | :15:50. | |

corporations and bureaucracies tend to ignore the rules that they think | :15:50. | :15:56. | |

should not impinge on them. There is real harm done. I was interested in | :15:56. | :16:00. | |

what you said about Germany and France. Some of the things you said | :16:00. | :16:04. | |

have been echoed very strongly by the Europeans, because they have been | :16:04. | :16:10. | |

bugged by the Americans. I will never say this again on your | :16:10. | :16:17. | |

programme and I haven't before, but I think this might be one place where | :16:17. | :16:24. | |

the European Union is the answer. We could, but historically | :16:24. | :16:28. | |

we have been placid in this area with the Americans. It is not | :16:28. | :16:32. | |

in our nature. I think the Europeans will actually be cross enough to | :16:32. | :16:36. | |

say, I'm sorry, but you have to think more about people who | :16:36. | :16:41. | |

are not citizens of the US. Citizens in the US have absolute privacy | :16:41. | :16:47. | |

rights, but nobody else does. Do you think the European Union might end | :16:47. | :16:51. | |

up protecting our privacy better than we are doing at the moment? | :16:51. | :16:57. | |

Well, it could hardly be less. For viewers around the world who don't | :16:57. | :17:03. | |

know your track record on the EU, that is an extraordinary statement. | :17:03. | :17:08. | |

Yes, someone is sitting there in shock. Civil liberties campaigners | :17:08. | :17:12. | |

in this country who you have worked with on other matters believe that | :17:13. | :17:17. | |

their information might have been unlawfully accessed by GCHQ and | :17:17. | :17:26. | |

their security services. Under Article eight of the Human Rights | :17:26. | :17:36. | |

| :17:36. | :17:41. | ||

Act, the right to privacy, which despite the fact that | :17:41. | :17:51. | |

you have been against the Human Rights Act you might agree with. | :17:51. | :17:59. | |

There are some parts of the Human Rights Act that I do not agree | :17:59. | :18:06. | |

with. But this, yes. My name might come up on the American list, I have | :18:06. | :18:12. | |

disagreed with things they said. And all that information is handed | :18:12. | :18:19. | |

over to them every day. Theresa May has said that we must also | :18:19. | :18:23. | |

reconsider our relationship with the European Court, and that withdrawal | :18:23. | :18:32. | |

should remain on the table. I am not with her on that. There may well be | :18:32. | :18:36. | |

something to talk about there, but the problem here is quite simple. | :18:36. | :18:39. | |

The original convention which we signed in the early 50s was quite | :18:39. | :18:49. | |

| :18:49. | :18:51. | ||

tightly drawn, and the court has gone beyond it. It has a doctrine | :18:51. | :18:54. | |

called the living instrument doctrine, which means they | :18:54. | :18:59. | |

interpret it in the light of everyday life. As we speak, they have come | :18:59. | :19:04. | |

out and suggested that we might no longer send people to prison for | :19:04. | :19:08. | |

their entire lives, that we might hold open the option of letting them | :19:08. | :19:14. | |

out. That sort of thing is way beyond their remit. It is beyond | :19:14. | :19:18. | |

what was intended by the founders of the European Convention. | :19:19. | :19:22. | |

We should pull it back to the original aim, which was to stop | :19:22. | :19:29. | |

torture, murder, crimes by the state and improper imprisonment. Those | :19:29. | :19:35. | |

were the big things. So to absolutely understand this, you do not think we | :19:35. | :19:39. | |

should withdraw from the Convention on human rights, but you do think we | :19:39. | :19:43. | |

should pull it back to its roots. Perhaps just | :19:43. | :19:53. | |

| :19:53. | :19:53. | ||

derogate little bits of it. Which remember off the top of my head the | :19:53. | :19:56. | |

individual clauses, but some of them are more about interpretation rather | :19:56. | :20:04. | |

than the wording. But the bits I am concerned about are things like | :20:04. | :20:08. | |

giving prisoners votes, that is our business. If we want to give them | :20:08. | :20:13. | |

votes we can. That is for us to decide, not the Europeans. If we | :20:13. | :20:16. | |

want to say somebody should go to prison forever because he has killed | :20:16. | :20:21. | |

his entire family or a whole series of other people we | :20:21. | :20:25. | |

are talking about. We should be able to say that it is not for the court to | :20:26. | :20:31. | |

tell Parliament what to do about that. Let's bring us back to some | :20:31. | :20:36. | |

domestic disclosures, particularly UKIP. You have been writing some | :20:36. | :20:41. | |

interesting things about that, suggesting in effect that there is | :20:41. | :20:44. | |

something to do with the relationship between David Cameron | :20:44. | :20:50. | |

and ordinary people which is not working. Classes always | :20:50. | :20:54. | |

characterised, but what it is really about is people feeling that we are | :20:54. | :21:03. | |

out of touch with them. It's not We are out of touch. There was a | :21:03. | :21:07. | |

survey in the last few days which said that the majority of the party | :21:07. | :21:15. | |

felt that we did not respect them. That is really serious. I think it | :21:15. | :21:22. | |

party membership. That is one of the reasons UKIP have done very well in | :21:22. | :21:29. | |

the last local elections. People were saying, I was at the polling | :21:29. | :21:38. | |

station, and people were saying that they're going to vote for UKIP | :21:38. | :21:44. | |

because of the language that we were using. Swivel eyed loons if I recall | :21:44. | :21:54. | |

| :21:54. | :21:55. | ||

correctly. Does it puzzle you that there are so many inner circle of | :21:55. | :22:04. | |

David Cameron. When I was in business, there used to be rules | :22:04. | :22:09. | |

about individuals recruiting people just like himself. We all do it, I | :22:09. | :22:12. | |

would be just as likely to recruit ex- working-class grammar | :22:12. | :22:19. | |

schoolboys. I have said to him, look, I don't care if you are all | :22:19. | :22:22. | |

Nobel Prize winners in physics. If you are all the same, it makes you | :22:22. | :22:32. | |

| :22:32. | :22:33. | ||

blind in some areas. And voters see that labour is. What is UKIP? They | :22:33. | :22:37. | |

are sort of a primary colours concern. It is a caricature of the | :22:37. | :22:47. | |

| :22:47. | :22:56. | ||

80s Tory party. It is a nice simple message to get across. | :22:56. | :22:59. | |

Maybe one of David Cameron's obvious answers to this is to | :22:59. | :23:06. | |

promote someone with a working-class background, a grammar | :23:06. | :23:16. | |

| :23:16. | :23:17. | ||

schoolboy... I see what you are getting at. But that sort of person. | :23:17. | :23:21. | |

There are plenty of people in our benches from a low background, very | :23:21. | :23:27. | |

capable, that maybe he should think about. We do worry about the number | :23:27. | :23:29. | |

of women and ethnic minorities. Perhaps we should worry about the | :23:29. | :23:34. | |

number of people who the ordinary public would identify with. That is | :23:34. | :23:42. |